TL;DR

You probably don't need it. If possible, implement a service worker to cache front-end assets and serve your app from a server that supports HTTP/2, but you probably don't need that either.

Recently I was tasked with implementing domain sharding on one of our clients' web apps, following recommendations from a well-known technical consultancy. Domain sharding is something I've implemented a few times before, but not recently, so I wanted to research the subject again. This is the conclusion I came to.

What is it?

Domain sharding is the common name for a technique in which web developers load resources from multiple domains or subdomains to work around the browser's limit on concurrent requests, and thereby improve load performance. The premise is that browsers only make a limited number of parallel requests per hostname, as suggested in the original HTTP/1.1 specification (RFC 2616, published in 1999, recommended at most 2 connections per server). At first glance, this sounds great: make all the things performant!

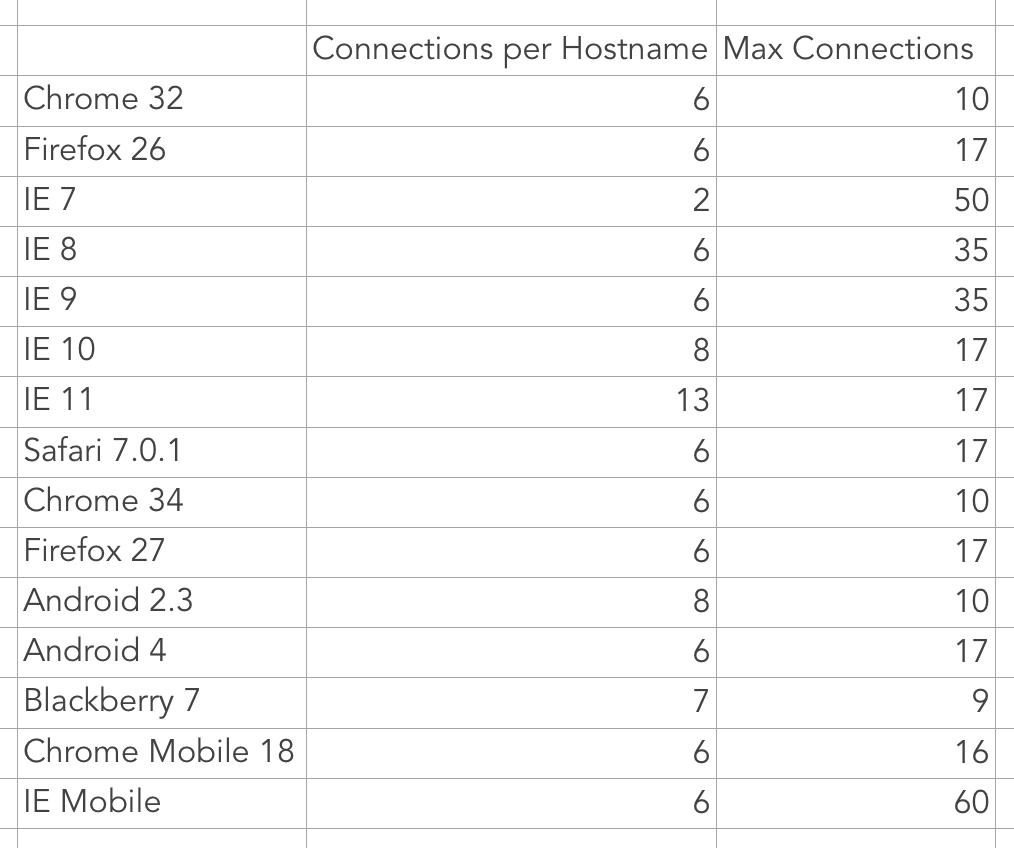

Number of concurrent requests per browser

Like all things web, finding a standard for the number of concurrent requests browsers support is no straightforward task.

For older browsers, like IE6/7, this limit was 2 requests per hostname; in more modern browsers it varies, with an average of around 6. Browsers also cap the total number of concurrent connections, regardless of how many different hostnames are used. This can be a major argument against domain sharding if you consider that some browsers only support 10–17 concurrent requests in total.
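To make the interaction between the two limits concrete, here is a minimal sketch. The `BrowserLimits` shape and the `effectiveParallelism` function are names I made up for illustration, and the numbers are just the rough figures quoted above (about 6 per hostname, 17 in total), not values for any particular browser.

```typescript
// Hypothetical limits for illustration; real values vary by browser and version.
interface BrowserLimits {
  perHost: number; // max concurrent requests to a single hostname
  total: number;   // max concurrent requests across all hostnames
}

// Upper bound on parallel requests when assets are spread over `shards` hostnames.
function effectiveParallelism(shards: number, limits: BrowserLimits): number {
  if (shards < 1 || Math.floor(shards) !== shards) {
    throw new RangeError("shards must be a positive integer");
  }
  // Each hostname contributes its own per-host budget, but the total cap still applies.
  return Math.min(shards * limits.perHost, limits.total);
}

const assumedLimits: BrowserLimits = { perHost: 6, total: 17 };
for (const shards of [1, 2, 3, 4]) {
  console.log(`${shards} shard(s): up to ${effectiveParallelism(shards, assumedLimits)} parallel requests`);
}
```

With these assumed numbers, a third hostname already runs into the total cap, so a fourth adds nothing.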

These limits were chosen by browser vendors after much thought and experimentation, to reduce the load on servers and find the optimum for most sites. Browser vendors cannot possibly be expected to implement a generic solution that suits every specific use case. You, as the developer, know your use case best.

When is it useful?

The main advantage of domain sharding is that assets which would otherwise be queued and downloaded sequentially can be downloaded in parallel. Most CDNs, as well as awesome services like Cloudinary, support multiple concurrent calls, so they are geared for domain sharding. It is generally most useful for image-heavy dynamic sites (think of a product catalogue on an e-commerce website), where images are frequently updated and a large number of them can appear on a single page. For a long time, it has been considered a best practice for these types of sites. Is this still relevant today? Let's continue…

I want to implement domain sharding; how many subdomains should I use?

In 2007, when domain sharding was still highly recommended, Yahoo ran an experiment and found that the optimal number of subdomains was between 2 and 4, with anything more than 4 actually degrading performance. One of the reasons is that each additional hostname requires its own DNS lookup, which could add 100–200ms+ before the first request to that host, depending on where the DNS servers are located relative to the user. If you are serving users globally, I would not use more than 2 subdomains.
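The trade-off between extra parallelism and extra DNS lookups can be sketched with a toy model. Everything here is an assumption for illustration: the `ShardModel` fields, the made-up timings, and especially the pessimistic simplification that the lookups for extra hostnames do not overlap with anything else. It is not a reconstruction of Yahoo's experiment.

```typescript
// Toy model only: all parameters are assumed values, not measurements.
interface ShardModel {
  assets: number;    // number of assets on the page
  perHost: number;   // concurrent requests allowed per hostname
  total: number;     // browser-wide cap on concurrent requests
  requestMs: number; // assumed time to download one asset
  dnsMs: number;     // assumed DNS lookup cost for each extra hostname
}

function estimateLoadMs(shards: number, model: ShardModel): number {
  const parallel = Math.min(shards * model.perHost, model.total);
  // Assets are downloaded in "rounds" of `parallel` requests each.
  const rounds = Math.ceil(model.assets / parallel);
  // Assumes the first shard is the already-resolved page hostname and that
  // lookups for the remaining hostnames are paid one after another (worst case).
  const dnsPenalty = (shards - 1) * model.dnsMs;
  return rounds * model.requestMs + dnsPenalty;
}

const assumedModel: ShardModel = { assets: 40, perHost: 6, total: 17, requestMs: 100, dnsMs: 150 };
for (const shards of [1, 2, 3, 4]) {
  console.log(`${shards} shard(s): ~${estimateLoadMs(shards, assumedModel)}ms`);
}
```

With these invented numbers the estimate is lowest at two shards and gets worse from there, which is the general shape of the argument above; real pages will behave differently.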

SPDY & HTTP/2

In 2015, the Internet Engineering Task Force (IETF) published the second major version of the HTTP protocol, unsurprisingly called HTTP/2. Its precursor was SPDY (pronounced "speedy"), a protocol Google started developing to scratch their own itch for lower load latency. SPDY set the stage for HTTP/2.

HTTP/2 allows multiple requests to be sent over a single TCP connection, along with a number of other benefits, including less header data over the wire and connections that stay open for longer, which avoids repeated handshake overhead. In practice it also requires that your site is served over HTTPS, since browsers only support HTTP/2 over TLS.

HTTP/2 is not perfect, mostly because it needs to be backwards compatible with HTTP/1.1, but it is a welcome step in the right direction for the web. Read more about it here and here.

Things to consider before implementing domain sharding

Domain sharding is very use-case specific and not a good solution for all sites. The technique is also a bit outdated now: it had its glory days around 2009–2013 and earlier, when browsers had a much lower limit on concurrent calls. If you only support A-grade browsers for your site, concurrent requests are a much smaller problem.

The most important thing to consider, in my opinion, is which browsers your site or web app needs to support. If you need to support older versions of IE (<IE10), I would not rule out domain sharding as a possible performance improvement.

Domain sharding is not as useful for sites with mostly static assets that rarely change, because these are served from the browser's cache after the first visit. The user will still feel the impact on the first load, though. You will get further by sending less JavaScript, CSS and other assets to your users with techniques like code-splitting, but that is a topic for another post.

It's also important that domain sharding isn't done randomly: each asset should consistently be loaded from the same subdomain. This lets the browser cache do its work, which will always be much faster than any amount of parallel downloads. E.g., if you load an image from sub1.example.com, make sure that image is always loaded from sub1.example.com and not sometimes from sub2.example.com. Loading the same image from different domains will actually worsen your page load performance.
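One way to keep the assignment consistent is to derive the shard from the asset path itself rather than picking one at random or round-robin. The sketch below is only an illustration: `SHARD_HOSTS`, `hashPath` and `shardUrl` are hypothetical names, the hostnames reuse the example above, and the hash is a simple multiply-by-31 string hash chosen for brevity, not for any special quality.

```typescript
// Hypothetical shard hostnames, reusing the example names from the text above.
const SHARD_HOSTS: readonly string[] = ["sub1.example.com", "sub2.example.com"];

// Simple deterministic string hash (multiply-by-31 style), kept within 32 bits.
function hashPath(path: string): number {
  let hash = 0;
  for (let i = 0; i < path.length; i++) {
    hash = (hash * 31 + path.charCodeAt(i)) >>> 0;
  }
  return hash;
}

// Maps an asset path to a full URL; the same path always yields the same hostname.
function shardUrl(path: string, hosts: readonly string[] = SHARD_HOSTS): string {
  if (hosts.length === 0) {
    throw new Error("at least one shard host is required");
  }
  const normalized = path.charAt(0) === "/" ? path : `/${path}`;
  const host = hosts[hashPath(normalized) % hosts.length];
  return `https://${host}${normalized}`;
}

console.log(shardUrl("/img/product-1.jpg"));
console.log(shardUrl("/img/product-2.jpg"));
```

Note that changing the number of hostnames later will move some assets to a different shard and invalidate their cached copies, which is one more reason to settle on a small, fixed set.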

Investigate where in your web app or site assets are actually being queued because of the browser's concurrent-request limit. It might not actually be a problem. Then, first try to reduce the number of assets before deciding on domain sharding.
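As a rough starting point for that investigation, the browser's Resource Timing entries can be grouped per hostname to see how many requests were outstanding at the same time. The sketch below is an assumption-laden helper of my own (`TimingEntry` and `peakOutstandingByHost` are made-up names); it only needs each entry's URL, start time and end time, so it can also be run on exported data.

```typescript
// The subset of a Resource Timing entry this sketch relies on.
interface TimingEntry {
  name: string;        // resource URL
  startTime: number;   // ms, when the fetch was initiated
  responseEnd: number; // ms, when the last byte arrived
}

// For each hostname, the highest number of requests outstanding at one moment.
function peakOutstandingByHost(entries: readonly TimingEntry[]): Record<string, number> {
  const eventsByHost: Record<string, Array<[number, number]>> = {};
  for (const entry of entries) {
    const host = new URL(entry.name).hostname;
    const events = eventsByHost[host] || (eventsByHost[host] = []);
    events.push([entry.startTime, 1], [entry.responseEnd, -1]);
  }
  const peaks: Record<string, number> = {};
  for (const host of Object.keys(eventsByHost)) {
    const events = eventsByHost[host];
    // Sort by time; at equal times process ends before starts so that
    // back-to-back requests are not counted as overlapping.
    events.sort((a, b) => a[0] - b[0] || a[1] - b[1]);
    let outstanding = 0;
    let peak = 0;
    for (const [, delta] of events) {
      outstanding += delta;
      peak = Math.max(peak, outstanding);
    }
    peaks[host] = peak;
  }
  return peaks;
}

// In a browser console, something along these lines should work:
// const timings = performance.getEntriesByType("resource") as PerformanceResourceTiming[];
// console.table(peakOutstandingByHost(timings));
```

A peak well above the per-hostname limit hints that some requests were waiting their turn; the network panel in your browser's dev tools will confirm whether they were really stalled.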

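If you are using a server that supports SPDY or HTTP/2, domain sharding will most likely hurt performance, because spreading requests over several hostnames opens extra connections instead of multiplexing everything over one.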

Final words & recommendation

My recommendation would be to implement a service worker and cache all front-end assets on the device's filesystem. This is by far the quickest method of getting assets loaded.
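The core of such a service worker is a cache-first lookup: answer from the cache when possible and only go to the network on a miss. The sketch below isolates that strategy behind small interfaces so it can be read outside a browser; `CacheLike`, `Cloneable` and `cacheFirst` are names I introduced, and the cache name `assets-v1` in the commented wiring is an assumption.

```typescript
// Minimal shapes of the pieces the strategy needs. In a real service worker,
// the browser's Cache, Request and Response objects fill these roles.
interface Cloneable<T> {
  clone(): T;
}
interface CacheLike<Req, Res> {
  match(request: Req): Promise<Res | undefined>;
  put(request: Req, response: Res): Promise<void>;
}

// Cache-first: serve from the cache if present, otherwise fetch and store a copy.
async function cacheFirst<Req, Res extends Cloneable<Res>>(
  request: Req,
  cache: CacheLike<Req, Res>,
  fetchFn: (request: Req) => Promise<Res>,
): Promise<Res> {
  const cached = await cache.match(request);
  if (cached !== undefined) {
    return cached; // no network request at all
  }
  const response = await fetchFn(request);
  // Store a clone, because a response body can only be consumed once.
  await cache.put(request, response.clone());
  return response;
}

// Possible wiring inside the service worker file (cache name is an assumption):
// self.addEventListener("fetch", (event) => {
//   event.respondWith(
//     caches.open("assets-v1").then((cache) => cacheFirst(event.request, cache, fetch)),
//   );
// });
```

A production version would at least restrict this to GET requests, skip caching failed responses, and version the cache so that new releases replace old assets.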

The fastest network request is no network request.

If it's not possible to implement a service worker because of browser support (hint: browser support is not bad) or you really want to use domain sharding, then my recommendation would be to add one additional subdomain (for front-end assets served from a CDN). This ensures that the assets are always loaded from the same host. If you have an API then that can be served from another domain.

First try to reduce the number of requests being made, so that unnecessary calls don't block more important ones. Additionally, look at enabling HTTP/2 support on your server.

If you agree or disagree with anything I've written please let me know; I'm always interested in learning from others.